Fine-tune your LLM with your custom data

Build your LLM-powered application faster

Use open-source LLMs or generative AI models

Fine-tune on demand with your own data

Benefit from HPC and serverless GPUs

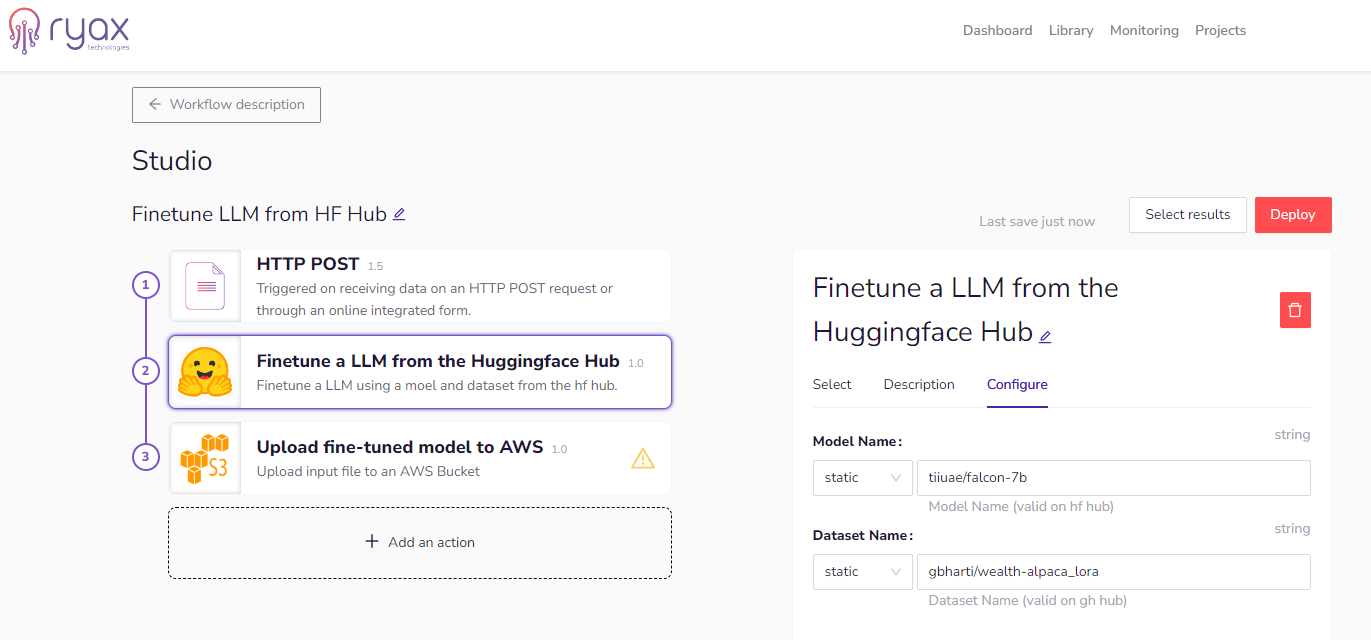

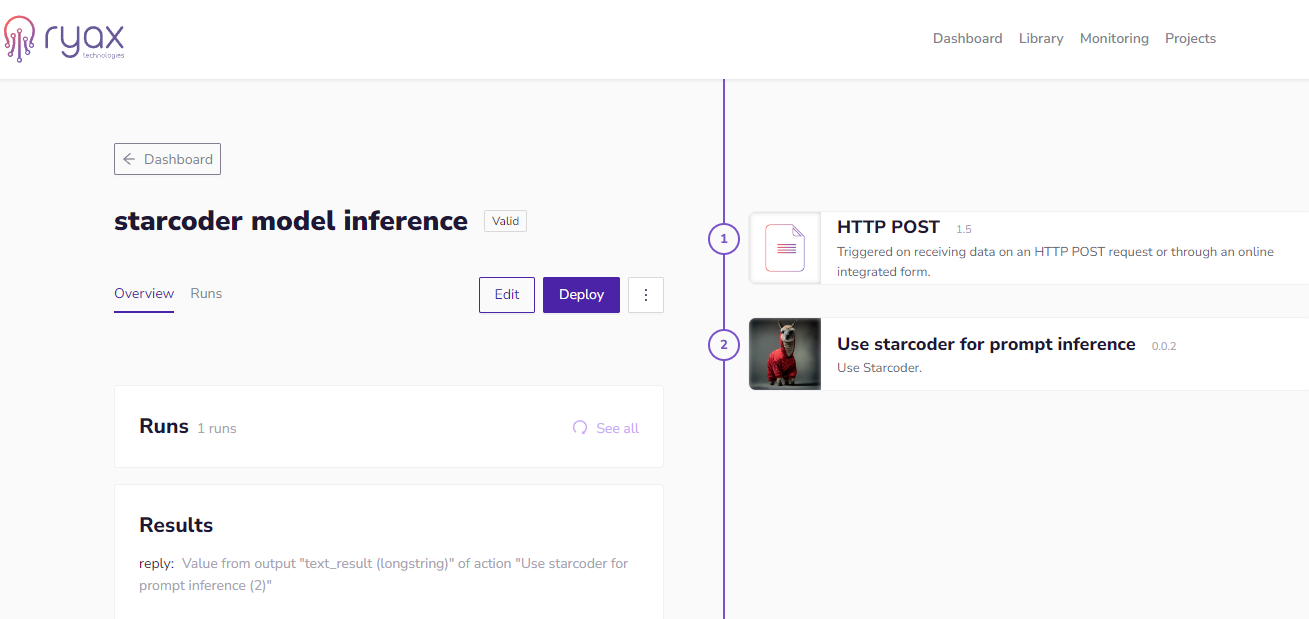

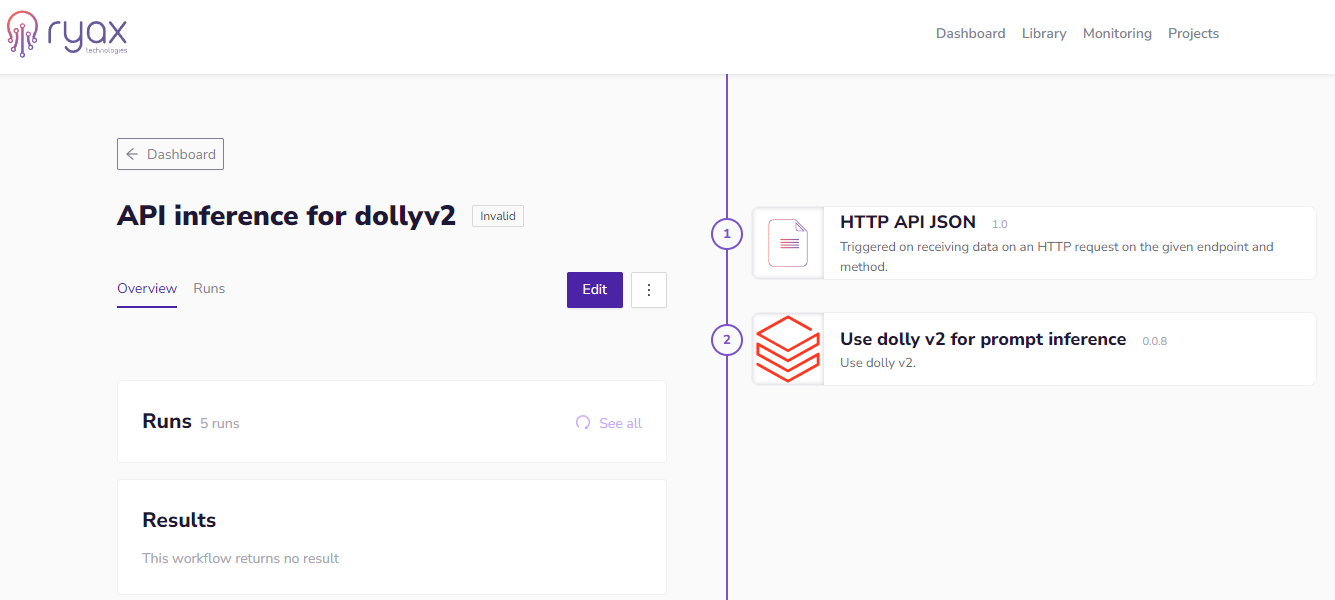

Ryax lets you build LLM applications and design data workflows, APIs, and pre- and post-processing steps without managing infrastructure or code deployment.

Time to market: Large language models are a key competitive advantage in today's technology business. These models improve rapidly, and staying up to date is vital. With Ryax, you can make sure you are using the model best suited to your use case and fine-tune it with your custom data, with CI/CD built into the Ryax platform!

Usage simplification: No-code and low-code tooling makes building your application simpler and faster, with reusable actions for pre- and post-processing, API requests, API security keys, and more. Deployment is just as simple: no code to manage and no environment to set up. Use Ryax and deploy anywhere.

Cost to market: Most major cloud providers bill GPU usage per minute, and their GPU services are complex to use, requiring data engineering expertise. Together, these factors keep the cost of GPU inference and fine-tuning on those platforms high. The Ryax Serverless GPUs feature enables per-second billing for GPUs. Combined with automated preparation of the software stack environment, adapted autoscaling, and tight integration with cloud application and API creation, it makes custom AI services affordable to run.